Outcome Value

Ensuring the robustness of neural networks against real-world perturbations is critical for their safe deployment. Existing verification techniques struggle to handle convolutional perturbations efficiently because they rely on loose bounding techniques and high-dimensional encodings. Our work advances the state of the art by providing a method that is both precise and scalable, allowing verification toolkits to handle larger networks. By certifying robustness under realistic conditions such as motion blur and sharpening, the approach helps mitigate risks associated with adversarial attacks and hidden weaknesses in practical applications.

Summary

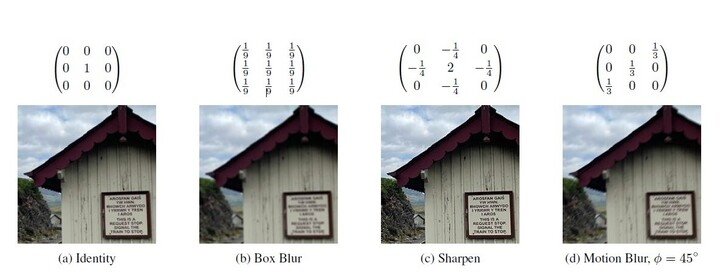

Neural networks have become integral to safety-critical applications such as autonomous driving and medical diagnosis, which makes their reliability essential. Our paper introduces a method for verifying the robustness of neural networks against convolutional perturbations such as motion blur and sharpening. We achieve this by parameterising perturbation kernels in a way that preserves key kernel properties while allowing controlled variation in perturbation strength. By integrating the convolution of inputs with these kernels into the verification process, our method yields tighter bounds and enables robustness certification to scale to large networks where existing methods fail.

Primary contributions

– We introduce a technique for linearly parameterising kernels that encode perturbations including motion blur, box blur, and sharpening effects. These parameterised kernels allow the strength of the perturbation applied to a given input to be varied.

– We present a theorem showing that an input can be convolved with a parameterised kernel easily, and we design layers that can be prepended to a neural network to encode the perturbations of interest into the network itself.

– Our experimental evaluation demonstrates that, because our encoding is low-dimensional, input splitting can be used to perform robustness verification quickly and to scale to architectures and network sizes that are out of reach of an existing baseline.
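
To make these contributions concrete, here is a minimal sketch in Python with NumPy. It rests on assumptions made for illustration and not taken from the paper: the kernel is assumed to be a linear interpolation between the identity kernel and a 3x3 box blur, controlled by a single strength parameter `z`, and the names `conv2d_same`, `parameterised_kernel`, and `pixel_bounds` are hypothetical helpers. Under these assumptions the perturbed image is affine in `z`, so per-pixel bounds follow from the two endpoints of the range of `z`, and splitting that range can only tighten them.

```python
import numpy as np

def conv2d_same(image, kernel):
    """Zero-padded 2D sliding-window filter; output has the input's shape."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image, ((ph, ph), (pw, pw)))
    out = np.zeros_like(image, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out

def parameterised_kernel(z, target):
    """Assumed parameterisation: identity kernel at z=0, `target` at z=1."""
    identity = np.zeros_like(target, dtype=float)
    identity[target.shape[0] // 2, target.shape[1] // 2] = 1.0
    return (1.0 - z) * identity + z * target

def pixel_bounds(image, target, z_lo, z_hi):
    """Per-pixel bounds for z in [z_lo, z_hi]; exact because each pixel is affine in z."""
    a = conv2d_same(image, parameterised_kernel(z_lo, target))
    b = conv2d_same(image, parameterised_kernel(z_hi, target))
    return np.minimum(a, b), np.maximum(a, b)

box_blur = np.full((3, 3), 1.0 / 9.0)
image = np.random.default_rng(0).random((5, 5))

# Filtering with the parameterised kernel equals an affine blend of two fixed images.
z = 0.3
direct = conv2d_same(image, parameterised_kernel(z, box_blur))
affine = (1.0 - z) * image + z * conv2d_same(image, box_blur)

# Splitting the one-dimensional parameter range gives bounds that are never looser.
full_lo, full_hi = pixel_bounds(image, box_blur, 0.0, 1.0)
half_lo, half_hi = pixel_bounds(image, box_blur, 0.0, 0.5)
```

The interpolated kernel keeps its entries summing to one for every `z`, which is one example of a kernel property such a parameterisation can preserve. Because the perturbation is described by a single scalar here, splitting means bisecting one interval instead of partitioning a full image-sized input region.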

What’s next

While this paper represents a significant step forward in verifying neural networks against convolutional perturbations, the challenge of scalability remains. As a next step, we’ll focus on further optimising the method and on exploring alternative verification techniques that can handle more complex perturbations in conjunction with ours. We’ll also investigate ways of integrating other realistic perturbations into verification approaches, so that a broader range of perturbations can be considered when assessing the safety of AI systems.

Link to paper