Brueckner, B., Kouvaros, P., Highlights from SAIV 2025

How can we truly trust an AI system?

As models learn from data in ways that aren’t always transparent, ensuring they are safe and reliable, especially in critical applications, is one of the most important challenges in technology today.

This is the central question that drives the Symposium on AI Verification (SAIV). This July, the event brought the researchers in formal verification and artificial intelligence to Zagreb, Croatia. Held alongside the prestigious Conference on Computer Aided Verification (CAV), it was the perfect place to discuss the latest breakthroughs in making AI safe. Naturally, the Safe Intelligence team was there, and we came back inspired.

A few presentations stood out to us for their direct relevance to the work we do every day. Here are some of our key takeaways:

Making AI More Efficient, Without Sacrificing Safety

Making AI models smaller and faster is crucial for real-world use. But how do you ensure that a streamlined “pruned” model is just as trustworthy as the original? Samuel Teuber (Personal Site, Bluesky) presented a new method for differential verification. His framework can formally prove that a smaller model behaves identically to its larger counterpart across vast scenarios, offering significant speedups over existing techniques. (Link to Paper)

Verifying AI in the Physical World: A Drone Case Study

Applying AI to physical systems like drones is notoriously difficult because real-world physics are incredibly complex. Colin Kessler (LinkedIn) shared a fascinating case study on creating robust controllers for gliding drones. His work demonstrates how clever simplifications can make it possible to verify these complex systems despite the various nonlinearities which arise when modelling such a system. His work was especially interesting because it pushes today’s verification tools to their absolute limits and shows how better training can lead to safer AI controllers. (Link to Paper)

Testing AI Vision Against Realistic Challenges

For a long time, testing the robustness of computer vision models involved adding simple “white noise” or static to an image. But that’s not what AI encounters in the real world. Jannick Strobel (https://www.sen.uni-konstanz.de/members/research-staff/jannick-strobel/ ) proposed novel properties for verifying the robustness of computer vision models which rely on image similarity measures. Although the similarity measures introduce nonlinear relations which impact the scalability of the method, the work is a great contribution towards being able to check the robustness of models exposed to more semantically meaningful input changes. (work not published yet, so no link, only https://www.aiverification.org/2025/talks/poster7/ )

VERONA: An Open-Source Toolkit for Better Experiments

For anyone in the field, comparing different verification tools can be a complex and time-consuming task. Annelot Bosman (https://www.universiteitleiden.nl/en/staffmembers/annelot-bosman#tab-1 ) presented VERONA, an open-source “experiment box” that makes it much easier to run and compare multiple verification toolkits at once. This is a great practical tool for accelerating research in the community. (Link to GitHub)

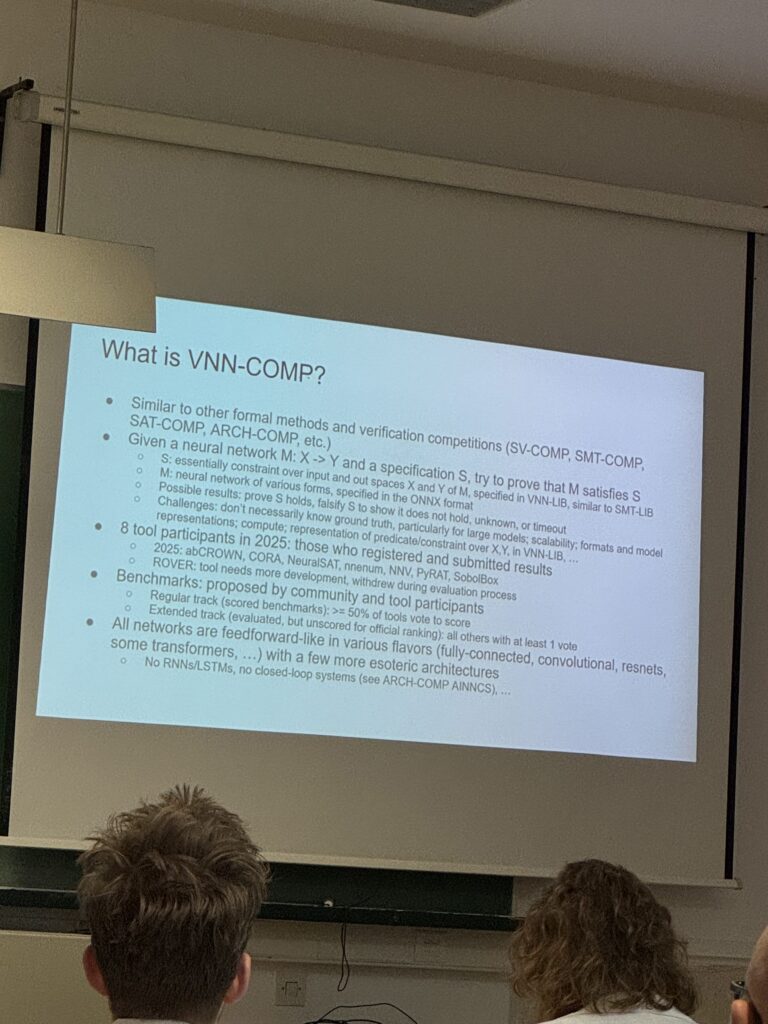

A Highlight: The Verification of Neural Networks Competition (VNN-COMP)

Konstantin Kaulen (https://rwthcontacts.rwth-aachen.de/person/PER-SF4C2YW ) presented the results of this year’s Verification of Neural Networks Competition (VNN-COMP) which was certainly a highlight at the symposium and saw the largest attendance.

- 🥇 1st Place: The champion for several years running, alpha-beta-CROWN, held its top spot with its powerful GPU-accelerated bound propagation approach.

- 🥈 2nd Place: NeuralSAT took an impressive second place using a completely different strategy for robustness verification based on ideas from mathematical logic and backtracking.

- 🥉 3rd Place: The abstract interpretation-based PyRAT secured a strong third place.

Seeing different techniques being employed across the field is definitely encouraging that there are many ways that could lead to even more scalable verifiers in the future, and besides the top three places there were various other tools that also achieved excellent results. Those are listed in the full results (https://docs.google.com/presentation/d/1ep-hGGotgWQF6SA0JIpQ6nFqs2lXoyuLMM-bORzNvrQ/ ). Konstantin further presented a work on terminating robustness verifiers early to save time when verification is not expected to terminate within the given time budget (https://ojs.aaai.org/index.php/AAAI/article/view/34946) and a library for the certified training of neural networks (https://openreview.net/forum?id=bWxDF6HosL).

From “Probably” to “Provably”: Guaranteeing Performance on New Data

One of the biggest questions in AI is: “How can we be sure a model will work on new data it has never seen before?” When assessing model generalisation capabilities, existing methods often only provide a statistical guess. Arthur Clavière (LinkedIn) introduced a formal method that provides more rigorous guarantees of a model’s performance on unseen inputs. It works by systematically breaking down the vast, continuous space of inputs into smaller, more manageable sub-regions. For each of those the method then attempts to prove that the model’s error stays within a predefined tolerance and, if unsuccessful, splits the region into smaller regions again. While the challenge of scaling such an analysis to cover large, high-dimensional input spaces remains, the work is a valuable step towards safer AI. (Link to Paper)

We had an amazing time at SAIV 2025. The discussions and advancements presented in Zagreb reinforce our mission at Safe Intelligence: building the tools to make AI provably safe and reliable. These developments aren’t just academic—they are the building blocks for the future we are working towards.

Zagreb was a beautiful backdrop for a week of intense learning and networking. We connected with great people and exchanged a lot of ideas with others in the field. We’re already looking forward to SAIV 2026!