Kouvaros, P., Brueckner, B., Henriksen, P., Lomuscio, A. (2025), Proceedings of the 39th AAAI Conference on Artificial Intelligence (AAAI25)

Outcome Value

The paper shows advances of state-of-the-art neural network verification by accelerating an algorithm which is used by most verification toolkits. This allows verifiers to scale to even large neural networks, aiding the certification of the safety of neural networks before their deployment.

Summary

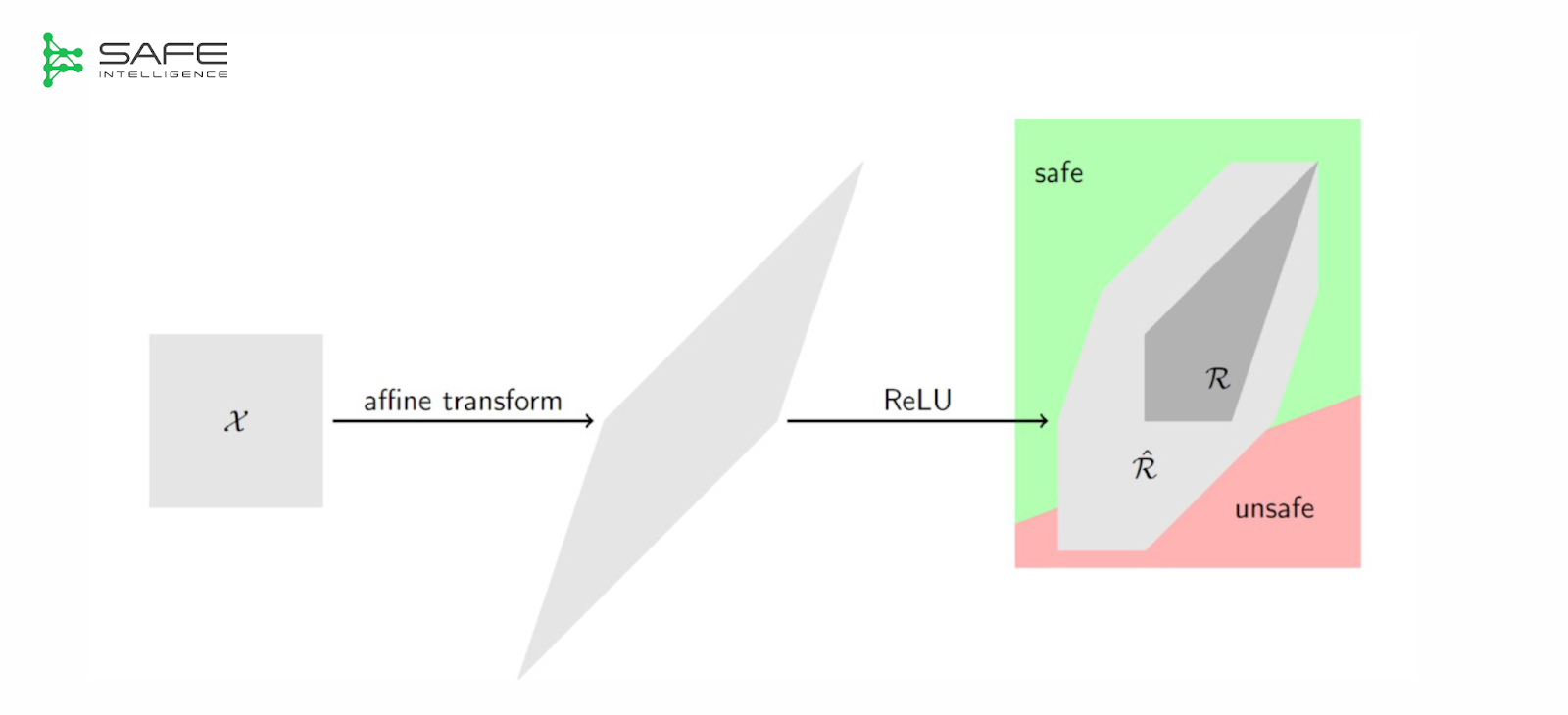

Bound Propagation is a popular method for obtaining bounds for a given layer of a neural network under a given perturbation. While different bound propagation methods exist, back-substitution is the most popular one due to its superior precision. Unfortunately, this precision comes at a substantial computational cost. We propose a method called Dynamic Back-Substitution which accelerates these computations without any loss in precision.

Primary contributions

The paper extends Venus, a neural network verification toolkit, with the dynamic back-substitution method to accelerate the computations run during bound propagation. The specific contributions are:

– The introduction of the dynamic back-substitution algorithm which uses intermediate results from cheaper bound propagation methods to dynamically reduce the size of the equations which are being propagated through the network if one can guarantee that no precision gain is to be expected from running back-substitution for specific neurons

– The design of a heuristic to govern the use of dynamic-back-substitution. The heuristic uses a cheap estimate of the expected gain to decide whether the full back-substitution procedure should be used for a given layer of a neural network

– A thorough empirical evaluation on a number of neural networks ranging from 92000 to 21.8M parameters which demonstrates that our method reduces the required time for running back-substitution by up to 40%

What’s next

Although a lot of progress has been made in neural network verification over the past years, scalability is still the main challenge that these methods face. Our aim is to improve the scalability of these methods even more by not only improving the algorithm introduced in this work and its governing heuristic, but by also experimenting with entirely different approaches to verification.

Link to paper